Introduction

It’s been a while since last time, when I promised a general method for depth reconstruction regardless of projection type. I had told myself to do it “soon”. Due to lack of time partly caused by moving to Ghent and other random occurrences “soon” changed into “sooner or later” and finally we’ve arrived at “later”, but here we are!

As a small disclaimer, implementing such a general case only makes sense if you need to support different projection types and can’t provide separate shaders for each. Bespoke implementations are obviously faster, especially in the case of orthographic projections.

For what follows I will continue to use excruciatingly slow step-by-step derivations.

The orthographic case

For completion’s sake, I’ll show position reconstruction for orthographic-only projections. This is considerably easier compared to perspective projections. After all, the value stored in the depth buffer is a value that maps linearly to the near and far plane. As a result, reconstructing the view-space ![]() value is therefore a simple linear interpolation between the two:

value is therefore a simple linear interpolation between the two:

![]()

Recall that in DirectX, ![]() is simply the depth buffer value, while in OpenGL it’s

is simply the depth buffer value, while in OpenGL it’s ![]() . The complete position can be similarly reconstructed:

. The complete position can be similarly reconstructed:

![]()

![]() and

and ![]() are the positions on the near and far plane for the current pixel being shaded. They are calculated by bilinearly interpolating between the near and far frustum corners. By simply passing the frustum corners from the vertex shader to the fragment shaders as interpolated values, this is handled by the hardware in the same way we interpolated the frustum direction

are the positions on the near and far plane for the current pixel being shaded. They are calculated by bilinearly interpolating between the near and far frustum corners. By simply passing the frustum corners from the vertex shader to the fragment shaders as interpolated values, this is handled by the hardware in the same way we interpolated the frustum direction ![]() in the previous post. The corners themselves are the 8 different combinations of

in the previous post. The corners themselves are the 8 different combinations of ![]() .

.

Reconstructing z: Generalization

Reconstruction the view-space ![]() value for the generalization doesn’t change much compared to the perspective projection-only version, except that we can’t make the same assumptions concerning the projection matrix;

value for the generalization doesn’t change much compared to the perspective projection-only version, except that we can’t make the same assumptions concerning the projection matrix; ![]() and

and ![]() are not 0 for orthographic projections. So we’re stuck with this:

are not 0 for orthographic projections. So we’re stuck with this:

![]()

Review the previous post if you’re hazy on why.

Calculating the position from the z-value: Generalization

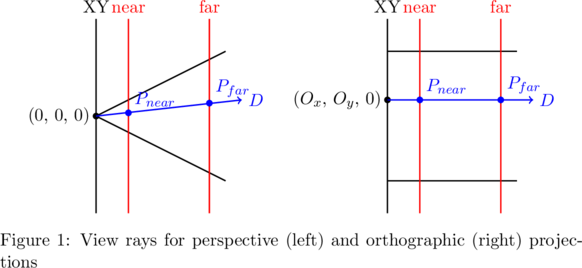

In the perspective-only case, we reconstructed the position value assuming the ray origin was 0. This is no longer the case for orthographic projections, as you can see below:

Evidently this means we’re going to have to use the full ray equation. We’ll have to define a ray origin point which we’ll keep on the ![]() -plane going through the origin to remain compatible with the origin used in the perspective version. We’ll call this point

-plane going through the origin to remain compatible with the origin used in the perspective version. We’ll call this point ![]() , with

, with ![]() . For both cases, we define the ray to be:

. For both cases, we define the ray to be:

![]()

Assuming nothing, we need to calculate ![]() . The line coinciding with the ray can be expressed as

. The line coinciding with the ray can be expressed as ![]() using a different origin, one whose value we know. Let’s pick the near position

using a different origin, one whose value we know. Let’s pick the near position ![]() .

. ![]() is simply that line’s intersection with the

is simply that line’s intersection with the ![]() plane, found by solving for

plane, found by solving for ![]() :

:

![]()

Plugging ![]() back into the line equation

back into the line equation ![]() , we get

, we get ![]() :

:

![]()

Remember ![]() ? We defined this as

? We defined this as ![]() , the z-normalized view vector. Armed with a ray origin, we can redo the same math as before. We’re interested in the point on the ray that is the view position, ie:

, the z-normalized view vector. Armed with a ray origin, we can redo the same math as before. We’re interested in the point on the ray that is the view position, ie: ![]() .

.

![]()

Using the fact that ![]() . Plugging

. Plugging ![]() back into the ray equation:

back into the ray equation:

![]()

I’ve taken the long way round showing what you probably already figured intuitively: it’s the same as the perspective case, but simply taking into account the origin vector.

Similarly to both the bespoke orthogonal and the perspective cases, ![]() and

and ![]() are to be precomputed for each corner of the quad that’s being rendered. The vertex shader can then simply pass them along to the fragment shader so they’re automatically interpolated for the pixel we’re currently operating on.

are to be precomputed for each corner of the quad that’s being rendered. The vertex shader can then simply pass them along to the fragment shader so they’re automatically interpolated for the pixel we’re currently operating on.

Calculating the view vectors

All that rests us to do is to calculate the z-normalized view vectors ![]() for each quad corner. This is simply done by calculating the near and far frustum corners and z-normalizing the difference. The corners in view space are handled entirely the same as we did last time: unprojecting NDC coordinates. The following code shows this, and also performs the ray origin calculations.

for each quad corner. This is simply done by calculating the near and far frustum corners and z-normalizing the difference. The corners in view space are handled entirely the same as we did last time: unprojecting NDC coordinates. The following code shows this, and also performs the ray origin calculations.

// This time it does matter since we're using the unprojection position for the origin calculation.

Vector3D nearHomogenousCorners[4] = {

Vector3D(-1.0f, -1.0f, 0.0f, 1.0f),

Vector3D(1.0f, -1.0f, 0.0f, 1.0f),

Vector3D(1.0f, 1.0f, 0.0f, 1.0f),

Vector3D(-1.0f, 1.0f, 0.0f, 1.0f)

};

Vector3D farHomogenousCorners[4] = {

Vector3D(-1.0f, -1.0f, 1.0f, 1.0f),

Vector3D(1.0f, -1.0f, 1.0f, 1.0f),

Vector3D(1.0f, 1.0f, 1.0f, 1.0f),

Vector3D(-1.0f, 1.0f, 1.0f, 1.0f)

};

Matrix3D inverseProjection = projectionMatrix.Inverse();

Vector3D rays[4];

Vector3D origins[4];

for (unsigned int i = 0; i < 4; ++i) {

Vector3D& ray = rays[i];

// unproject the far and near frustum corners from NDC to view space

Vector3D nearPos = inverseProjection * nearHomogenousCorners[i];

Vector3D farPos = inverseProjection * farHomogenousCorners[i];

nearPos /= nearPos.w;

farPos /= farPos.w;

ray = farPos - nearPos;

// z-normalize this vector

ray /= ray.z;

origins[i] = nearPos - ray * nearPos.z;

}

Working in world space

You can simply change to world space reconstruction by transforming both ![]() and

and ![]() to world space, and everything will happen by itself:

to world space, and everything will happen by itself:

![]()

Conclusion

One final note about the ![]() calculation above. Working with only 2 projection types, you may consider to check for the projection type and simply pass in more easily calculated values:

calculation above. Working with only 2 projection types, you may consider to check for the projection type and simply pass in more easily calculated values: ![]() for perspective and the 4 combinations of

for perspective and the 4 combinations of ![]() for orthographic projections. That’s up to you. Personally, unless absolutely necessary, I’ll always prefer an elegant calculation to a horrible if-statement checking for types!

for orthographic projections. That’s up to you. Personally, unless absolutely necessary, I’ll always prefer an elegant calculation to a horrible if-statement checking for types!

So finally, I got this post out. I hope it can be of use to anyone. As always, any questions or suggestions, feel free to drop a line in the comment box.

Hi.

I’ve managed to reach this post as I’ve implemented position reconstruction as described here:

http://mynameismjp.wordpress.com/2010/09/05/position-from-depth-3/

However, it obviously doesn’t work correctly for orthographic projections. Given that I’m drawing light volumes instead of fullscreen quads, I don’t have access to the four interpolated frustum corners. Is there a way to adapt the technique you’ve described to work with perspective and orthographic projections if I only really have access to either the current screen (x, y) position, or the eye-space position of the current fragment in the light volume?

Hi ac7,

Yes, you can still pass in the 4 frustum corners and interpolate manually using projected coordinates (normalized to [0, 1] range) for each vertex in the light volume geometry, then pass it on to the fragment shader that way. For example:

float4 screenSpace = mvpMatrix * vertex;

screenSpace /= screenSpace.w; // [-1, 1] NDC range

screenSpace = screenSpace * .5 + .5; // [0, 1] range

out.frustumVec = frustumTopLeft + (frustumBottomRight – frustumTopLeft) * screenSpace.xy;

There’s some obvious optimizations that can be done by moving constant arithmetic out of the shader (fe: frustumBottomRight – frustumTopLeft can be precomputed), but this should give you an idea.

Hope that helps,

David

Hi, thanks for the reply.

I at least have linear eye-space Z reconstruction working correctly

(and it works correctly regardless of the projection used, which is

great, thanks!). The article encouraged me to go back and revisit the

mathematics of projection. On the platform I’m using, I have very

little in the way of shader debugging capability, so I want to be

sure I’ve translated what you’ve written in the abstract to concrete

shader code. Please pardon the possible stupid questions to follow!

You’ve suggested that I do manual interpolation of the view rays

(and additionally, I think, the origins too). I already have the

texture-space coordinates of the screen-space position of the current

light volume’s vertex. These are already used in my existing shaders

to sample from the g-buffer, so that’s not a problem.

However, given that I’m going to be manually interpolating vectors

rather than letting the vertex shader do it, I’m somewhat confused

as to exactly what I need to pass in to the fragment shader.

Unless I misunderstood, you seemed to be suggesting that I only need to

pass in the top/left and bottom/right vectors of each of the two sets

of four frustum corners. This makes intuitive sense: I can obviously

interpolate between these two corners if I have x/y values in the

range [0.0, 1.0]. If so… Why would the code need to unproject all

eight corners if only four (two near, two far) are really required?

Is this just for the purposes of demonstration?

The approach in the article is different from what you need to do because it’s using the quad vertices that match up with the actual frustum rays (and yes, origins too). In other words, they can just be passed on as they are. Your vertices however, do not match the four corners which is why they need to be interpolated manually in the vertex shader. These are then passed on to the fragment shader, and the GPU will further interpolate them for each fragment.

The 8 corners are unprojected because it’s required if you’re working in world space instead of view space. In your case you’ll also be needing to use bilinear interpolation between 4 rays instead of linear between 2, the example I posted earlier as an example would not work.

Ah, got it, thanks.

For the record, I’m working in view space.

Hello again.

Quick note: Unless I’m mistaken, the function to calculate view rays needs to have the ray Z component flipped if working in a system with a right-handed view space (most OpenGL systems, I’d imagine):

Otherwise the resulting view rays will point towards positive Z (out of the scene rather than into it).

Hi ac7,

Hm, not really, I’d expect the linear z calculated from depth would already have the correct sign, so when multiplied with the ray (having z == +1), it will point the right way.

Cheers,

David

Ah yes, of course… Multiplying a negative linear Z value by a negative-Z pointing ray is going to result in a positive-Z pointing ray.

Hi again. One last one, I think, as I’ve implemented the system you’ve described and it works flawlessly, great work!

I notice that during the unprojection of the frustum corners, you do this:

...

nearPos /= nearPos.w;

My understanding is that view-space coordinates are transformed to clip-space by multiplication by the projection matrix, and then transformed to normalized-device space by division by their own w components.

Given that the near/far corners are specified in normalized-device space, I’m not sure I understand how a multiplication by the inverse of the projection matrix followed by a division by w gets one back to view-space from normalized-device space. Obviously if the coordinates were specified as clip-space coordinates, the inverse projection matrix would transform them to view-space, but…

It’s easy to get confused there. It’s probably a good idea to read up on homogeneous coordinates in that case. The thing is that we’re working with 4D coordinates, in which a scalar multiples are equivalent (ie: P === s*P), in other words representing the same point. Projections work with homogeneous coordinates, so unprojecting a point from NDC (with w == 1) and dividing the result by w represents the same point as if we had unprojected the original homogeneous coordinate we projected in the first place to obtain said NDC.

In other words, if the original projection happened like this:

p_hom = Proj * p_view

p_ndc = p_hom / p_hom.w

p_ndc and p_hom represent the same 3D point in homogeneous space, since they’re a scalar multiple of eachother.

Then naturally, you would expect the unprojection to be:

p_hom = p_ndc * p_hom.w; // but at this point, we don’t know p_hom.w

p_view = Unproj * p_hom

So to solve this, we simply use an equivalent point we DO know (ie, p_ndc)

p_view_hom = Unproj * p_ndc // in this case, p_view_hom.w will no longer be 1, so we divide to obtain regular 3D coordinates representing the same point.

p_view = p_view_hom / p_view_hom.w

Hope that helps!

Yes, makes complete sense. Thanks very much!

Pingback: Unprojections Explained | Der Schmale - David Lenaerts's blog

Glad it helped! If you’re interested, I decided to elaborate here: https://www.derschmale.com/2014/09/28/unprojections-explained/

Thanks for the article. This page helped verify my math Unprojecting and inverted depth buffer. Just wanted to say thanks!