Introduction

[edit] To start things off more easily, I decided to limit this post to perspective projections and move on to the generalization (including orthographic projections) in a next blog post.

When doing deferred shading or some post-processing effects, we’ll need the 3D position of the pixel we’re currently shading at some point. Rather than waste memory and bandwidth by storing the position vectors explicitly in a render target, the position can be reconstructed cheaply from the depth buffer alone. This is data we already have at our disposal. The techniques to do so are pretty commonplace, so this article will hardly be a major revelation. So why bother writing it at all? Well…

- Too often, you’ll stumble over code keeping a position render target anyway.

- Often, articles explaining the technique are not entry-level and skip over derivations, making it hard for beginners to figure things out.

- Many aspects of the implementation are scattered across many articles and forum posts. I’d like a single comprehensive article.

- It made a largely unexplained appearance in the previous post’s sample code, so I figured I might as well elaborate.

- The therapeutic value of writing? ;)

Since I’m trying to keep it at an entry level, the math will have a slightly step-by-step approach. Sorry if that’s too slow :)

Note: I remember an article by Crytek briefly mentioning similar material, but I can’t seem to find it anymore. If anyone can point me to it, let me know!

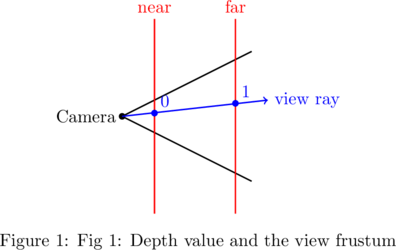

Non-linearity

So as you (should) already know, the depth buffer contains values between 0 and 1, representing the depth at the near plane and far plane respectively. A ray goes from the camera through the “screen” into the world, and the depth defines where exactly on that ray the shaded point lies. If you’ve never touched the depth buffer before, you might be tempted to simply linearly interpolate between where that ray intersects the near and far planes. However, for perspective projections the depth buffer’s values are not linear in view-space depth, so there goes that idea.
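To get a feel for just how non-linear the values are, here is a minimal C++ sketch. It assumes a DirectX-style perspective projection (depth in [0, 1], view-space z pointing into the screen); the function and parameter names are made up for this example and don't come from any library.

```cpp
#include <cassert>

// Depth buffer value for a view-space depth viewZ, assuming a DirectX-style
// perspective projection. zNear and zFar are the clip plane distances
// (hypothetical parameter names for this sketch).
double depthFromViewZ(double viewZ, double zNear, double zFar)
{
    assert(zNear > 0.0 && zFar > zNear && viewZ > 0.0);
    // z row of the projection matrix: P_zz = f / (f - n), P_zw = -n * f / (f - n)
    const double pzz = zFar / (zFar - zNear);
    const double pzw = -zNear * zFar / (zFar - zNear);
    // The perspective divide uses w_clip = viewZ
    return (pzz * viewZ + pzw) / viewZ;
}
```

With zNear = 0.1 and zFar = 100, a point halfway between the two planes (viewZ = 50.05) ends up with a depth of roughly 0.999, nowhere near the 0.5 a linear mapping would give.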

Often, linear depth is stored explicitly in a render target to make this approach possible. Depending on your case, this might be a valuable option. If you expect to sample the depth along with the normals most of the time, you could throw it in there and have all the data in one texture fetch. This is, of course, provided the render target has enough precision for depth, which may be more than you’d want to spend on your normals.

Reconstructing z

So, it’s obvious we’re going to need to convert the depth buffer’s value to a linear depth representation. Instead of converting to another [0, 1]-based range, we’ll calculate the view position’s z value directly. As we’ll see later on, we can use this to very cheaply reconstruct the whole position vector. To do so, remember what the depth buffer’s value contains. In the vertex shader, we projected our vertices to homogeneous (clip) coordinates. These are eventually converted to normalized device coordinates (NDC) by the GPU by dividing the whole vector by its w component. The NDC coordinates essentially result in XY coordinates from -1 to 1 which can be mapped to screen coordinates, and a Z coordinate that is used to compare with and store in the depth buffer. It’s this value that we want. In other words, given projection matrix $P$ and view space position $v$ (with $P_{ij}$ denoting row $i$, column $j$, applied to column vectors):

$$z_{ndc} = \frac{(Pv)_z}{(Pv)_w}$$

Writing this out in full for $(Pv)_z$ and $(Pv)_w$:

$$z_{ndc} = \frac{P_{zx} v_x + P_{zy} v_y + P_{zz} v_z + P_{zw} v_w}{P_{wx} v_x + P_{wy} v_y + P_{wz} v_z + P_{ww} v_w}$$

$$z_{ndc} = \frac{P_{zz} v_z + P_{zw}}{P_{wz} v_z + P_{ww}}$$

Here, we assumed a regular projection matrix where the clip planes are parallel to the screen plane. No wonky oblique near planes! This means $P_{zx} = P_{zy} = P_{wx} = P_{wy} = 0$. $v$ is a regular point, hence $v_w = 1$.

Solving for $v_z$:

$$v_z = \frac{P_{zw} - z_{ndc} P_{ww}}{z_{ndc} P_{wz} - P_{zz}}$$

If you know you’ll have a perspective projection, you can optimize by entering the values for $P_{wz}$ and $P_{ww}$.

For DirectX ($P_{wz} = 1$ and $P_{ww} = 0$):

$$v_z = \frac{P_{zw}}{z_{ndc} - P_{zz}}$$

For OpenGL ($P_{wz} = -1$ and $P_{ww} = 0$):

$$v_z = \frac{-P_{zw}}{z_{ndc} + P_{zz}}$$

If you’re using DirectX, $z_{ndc}$ is simply the depth buffer value $d$. OpenGL uses the convention that $z_{ndc}$ ranges from -1 to 1, so $z_{ndc} = 2d - 1$.

Depending on your use case, you may want to precalculate this value into a lookup texture rather than performing the calculation for every shader that needs it.
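As a rough illustration of the depth-to-z conversion, here is a CPU-side C++ sketch of the general form and the two perspective shortcuts. The `ProjectionTerms` struct and the function names are hypothetical; the entries are assumed to follow the row/column naming used in this post ($P_{zz}$, $P_{zw}$ from the z row, $P_{wz}$, $P_{ww}$ from the w row). In a real shader you’d read these straight from your projection matrix, minding its storage order.

```cpp
#include <cassert>

// Only the four projection matrix entries the conversion needs
// (hypothetical helper type for this sketch).
struct ProjectionTerms {
    double zz, zw; // z row: P_zz, P_zw
    double wz, ww; // w row: P_wz, P_ww
};

// General form: v_z = (P_zw - z_ndc * P_ww) / (z_ndc * P_wz - P_zz)
double viewZFromNdc(const ProjectionTerms& p, double zNdc)
{
    const double denominator = zNdc * p.wz - p.zz;
    assert(denominator != 0.0);
    return (p.zw - zNdc * p.ww) / denominator;
}

// DirectX-style perspective (P_wz = 1, P_ww = 0): depth is z_ndc itself
double viewZFromDepthDirectX(const ProjectionTerms& p, double depth)
{
    return p.zw / (depth - p.zz);
}

// OpenGL-style perspective (P_wz = -1, P_ww = 0): remap depth to [-1, 1] first
double viewZFromDepthOpenGL(const ProjectionTerms& p, double depth)
{
    const double zNdc = 2.0 * depth - 1.0;
    return -p.zw / (zNdc + p.zz);
}
```

Note that with the usual OpenGL convention the camera looks down the negative z axis, so the reconstructed view-space z comes out negative there.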

Calculating the position from the z-value for perspective matrices

Now that we have the z coordinate of the position, we basically have everything we need to construct the position. For perspective projections, this is very simple. We know the point $v$ is somewhere on the view ray with direction $D$ (in view space, with origin = 0). For now, we assume nothing about $D$ (it’s not necessarily normalized or anything). We solve for $t$ using $v_z$, the only component we know everything about, and substitute.

$$v = tD \quad\Rightarrow\quad t = \frac{v_z}{D_z} \quad\Rightarrow\quad v = \frac{v_z}{D_z} D$$

(Yes, I hear you sighing, this is a simple intersection test.)

With this formula, we can make an optimization by introducing a constraint on $D$. If we resize $D$ so that $D_z = 1$ (let’s call this a z-normalization), then things simplify:

$$v = v_z D$$

We can precalculate $D$ for each screen corner and pass it into the vertex shader. The vertex shader in turn can pass it on to the fragment shader as an interpolated value. Since interpolation is linear, we’ll always get a correct view ray with $D_z = 1$. This way, the reconstruction happens with a single multiplication! Using a compute shader as with the HBAO example, the interpolation has to be performed manually.
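For the manual case, the interpolation and the final multiplication could look roughly like the C++ sketch below. The types and function names are invented for illustration, and the corner order (bottom-left, bottom-right, top-right, top-left) is an assumption; adapt it to however you store your rays.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };

// Component-wise linear interpolation between two vectors
Vec3 lerpVec3(const Vec3& a, const Vec3& b, double t)
{
    return { a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t, a.z + (b.z - a.z) * t };
}

// Bilinearly interpolates the four z-normalized corner rays.
// Assumed corner order: bottom-left, bottom-right, top-right, top-left,
// with u running left to right and v bottom to top, both in [0, 1].
Vec3 interpolateViewRay(const Vec3 corners[4], double u, double v)
{
    assert(u >= 0.0 && u <= 1.0 && v >= 0.0 && v <= 1.0);
    const Vec3 bottom = lerpVec3(corners[0], corners[1], u);
    const Vec3 top = lerpVec3(corners[3], corners[2], u);
    return lerpVec3(bottom, top, v);
}

// position = viewZ * D, which only holds because D.z == 1
Vec3 reconstructViewPosition(const Vec3& zNormalizedRay, double viewZ)
{
    return { zNormalizedRay.x * viewZ, zNormalizedRay.y * viewZ, zNormalizedRay.z * viewZ };
}
```

Because every corner ray has z = 1, any interpolated ray has z = 1 as well, so the reconstructed position’s z component is exactly the view z that went in.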

Calculating the view vectors

There are various ways to go about calculating the view vectors for a perspective projection. Since it’s only done once every time the projection properties change, it’s not exactly a performance-critical piece of code. I’ll go for what I consider the ‘neatest’ way. Since we use the projection matrix to map from view-space points to homogeneous coordinates and convert those to NDC, we can invert the process to go from NDC to view-space coordinates. The view directions for every quad corner correspond to the edges of the frustum linking near plane corners to far plane corners. These are simply formed by the NDC extents -1.0 and 1.0 (0.0 and 1.0 for z in DirectX), since the frustum in NDC forms an axis-aligned cube.

Edit: More on unprojections here.

// z = 0.0f is the near plane in DirectX,
// but it doesn't matter since any z value lies on the same ray anyway.
Vector3D homogenousCorners[4] = {
    Vector3D(-1.0f, -1.0f, 0.0f, 1.0f),
    Vector3D(1.0f, -1.0f, 0.0f, 1.0f),
    Vector3D(1.0f, 1.0f, 0.0f, 1.0f),
    Vector3D(-1.0f, 1.0f, 0.0f, 1.0f)
};

Matrix3D inverseProjection = projectionMatrix.Inverse();
Vector3D rays[4];

for (unsigned int i = 0; i < 4; ++i) {
    Vector3D& ray = rays[i];
    // unproject the frustum corner from NDC to view space
    ray = inverseProjection * homogenousCorners[i];
    ray /= ray.w;
    // z-normalize this vector
    ray /= ray.z;
}

Pass the rays into the vertex shader, either as a constant buffer using vertex IDs or as a vertex attribute and Bob’s your uncle!
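If you don’t have a matrix inverse at hand, there is a closed-form shortcut for the common case. This is not the method used above, just a sketch that assumes a DirectX-style, symmetric (not off-center) perspective projection where the clip-space w equals the view-space z. The names are hypothetical.

```cpp
#include <cassert>

struct Ray3 { double x, y, z; };

// z-normalized view ray through the NDC point (xNdc, yNdc).
// pxx and pyy are the x and y scale terms on the projection matrix diagonal.
// Assumes a symmetric DirectX-style perspective projection (w_clip = v_z).
Ray3 cornerRay(double xNdc, double yNdc, double pxx, double pyy)
{
    assert(pxx != 0.0 && pyy != 0.0);
    // x_ndc = P_xx * v_x / v_z  =>  v_x / v_z = x_ndc / P_xx (same for y)
    return { xNdc / pxx, yNdc / pyy, 1.0 };
}
```

Calling this for the four NDC corners (-1, -1), (1, -1), (1, 1) and (-1, 1) should give the same rays as unprojecting with the inverse matrix under those assumptions; for anything more exotic, stick with the unprojection.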

Working in world space

If you want to perform your lighting or whatever in world space, you can simply transform the z-normalized view rays to world space and add the camera position. No need to perform matrix calculations in your fragment shader.

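$$p_{world} = c + v_z D_{world}$$

Here $c$ is the camera position in world space and $D_{world}$ is the z-normalized view ray transformed to world space as a direction (rotation only, no translation).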
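A minimal C++ sketch of the world-space variant follows. The types are made up for this example, and the matrix is assumed to be the rotation part of the inverse view (camera-to-world) matrix, applied to column vectors. The important detail is that the ray is transformed as a direction, so the camera translation is only added once, at the end.

```cpp
#include <cassert>

struct Vec3w { double x, y, z; };

// Rotation part of the camera-to-world matrix, row-major, for column vectors
// (hypothetical minimal type for this sketch).
struct Rotation3 { double m[3][3]; };

// Transforms a z-normalized view ray to world space as a direction (w = 0)
Vec3w toWorldRay(const Rotation3& r, const Vec3w& viewRay)
{
    assert(viewRay.z == 1.0); // the ray must be z-normalized first
    return {
        r.m[0][0] * viewRay.x + r.m[0][1] * viewRay.y + r.m[0][2] * viewRay.z,
        r.m[1][0] * viewRay.x + r.m[1][1] * viewRay.y + r.m[1][2] * viewRay.z,
        r.m[2][0] * viewRay.x + r.m[2][1] * viewRay.y + r.m[2][2] * viewRay.z
    };
}

// p_world = cameraPosition + viewZ * worldRay
Vec3w reconstructWorldPosition(const Vec3w& cameraPosition, const Vec3w& worldRay, double viewZ)
{
    return {
        cameraPosition.x + worldRay.x * viewZ,
        cameraPosition.y + worldRay.y * viewZ,
        cameraPosition.z + worldRay.z * viewZ
    };
}
```

Only `reconstructWorldPosition` would run per pixel; the ray transform happens once per corner on the CPU, just like the z-normalization.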

Conclusion

There we have it! I think it should be straightforward enough to implement this in a shader. If not, let me know and I shall have to expand on this. Just stop storing your position vectors now, mkay? :)

Very cool ! Thanks for the post, very useful.

Hey David,

Your article was just what I needed to gain a good understanding in how to reconstruct my vertex position in my webgl deferred render pipeline.

So far I have one question/remark regarding the z depth reconstruction topic, specifically the optimized step. Writing it out in full works like a charm but not the optimized step. I’m pasting the code here so you can have a look at it, perhaps you can spot the problem?

Thanks in advance!

Working code

Not-working code

Hi Dennis,

It looks like your signs are flipped (my view space Z axis is pointing into the screen). Since your sign is flipped for the full version, you’ll also need to do this for the reduced step.

If you simply enter the real values of the matrix into your working full version, you can’t really go wrong :)

Hope that helps!

Hello,

I’m currently trying to implement your HBAO technique in Unity but I ran into some problems while doing so. It seems like I’m not doing something correctly when reconstructing the view-space position from the depth buffer. This is how I do it:

float DepthToViewZ(float depthValue)
{
    // option1
    float depth = (depthValue * UNITY_MATRIX_P[3][3] - UNITY_MATRIX_P[3][2]) /
                  (depthValue * UNITY_MATRIX_P[2][3] - UNITY_MATRIX_P[2][2]);
    return depth;

    // option2
    // float depth = 1000.0F / (depthValue - 0.1F);
    // return depth;
}

float GetDepth(float2 uv)
{
    // option1
    float centerDepth = tex2D(_CameraDepthTexture, pin.UV).r;
    float3 centerViewPos = pin.FrustumVector.xyz * DepthToViewZ(centerDepth);
    return centerDepth;

    // option2
    // float centerDepth; float3 centerNormal;
    // DecodeDepthNormal(tex2Dlod(_CameraDepthNormalsTexture, float4(pin.UV, 0, 0)), centerDepth, centerNormal);
    // return centerDepth;
}

float frag (FragmentIn pin) : SV_TARGET
{
    float centerDepth = tex2D(_CameraDepthTexture, pin.UV).r;
    float3 centerViewPos = pin.FrustumVector.xyz * DepthToViewZ(centerDepth);
    float2 frustumDiff = float2(_ViewFrustumVector2.x - _ViewFrustumVector3.x, _ViewFrustumVector0.y - _ViewFrustumVector3.y);
    float w = centerViewPos.z * UNITY_MATRIX_P[2][3] + UNITY_MATRIX_P[3][3];
    float2 projectedRadii = _HalfSampleRadius * float2(UNITY_MATRIX_P[1][1], UNITY_MATRIX_P[2][2]) / w;
    float screenRadius = projectedRadii.x * _ScreenParams.x;

    if (screenRadius < 1.0F)
    {
        return 1.0F;
    }

    // continue...
}

Now the problem is that if I use option1, I seem to get the correct result (if I directly output the view-space position), but when I scale the sample radius I get a wrong result, so I never get past

if (screenRadius < 1.0F)
{
    return 1.0F;
}

On the contrary, if I use option2 I get a wrong result but at least the screenRadius value is correct. Now as you said above, I suspect my problem may be that the view-space Z axis direction is flipped but I can’t figure out how to fix this under Unity.

centerViewPos output option1:

http://i57.tinypic.com/2dc65xl.png

centerViewPos output option2:

http://i58.tinypic.com/erhok3.png

Thanks in advance, and awesome implementation by the way!

Cheers

Hi Micael,

Option 1 doesn’t look correct to me either; the x-value increases vertically instead of horizontally, similarly for the y-value. I’m not too familiar with Unity’s internal representation (for shame, I know, it’s on my to-do list). Is it possible that the matrices are stored in a different major order, so [2][3] would need to be [3][2]?

Fingers crossed!

Hi David,

I tried to mess around with the projection matrix but I don’t think it’s the source of the issue, at least it’s not related to the major order. Unity uses a column-major order, can you confirm if you’re using the same? At this point I’m still trying to build the view-space position but I don’t really have a running example to refer to so it’s kinda difficult. I’m currently using the same method as in the HBAO shader example to rebuild the view-space position (interpolated frustum points) but the position I get seems to be projected on the wrong z axis. I searched around to see what a view-space position output should look like and added a function that flips the vector on the z axis to get the same output.

{
    float3 flipped;
    flipped.x = -position.y;
    flipped.y = -position.x;
    flipped.z = -position.z;
    return flipped;
}

And the before/after:

http://i57.tinypic.com/j74uwi.png

And this is my projection matrix:

0.00000 1.73205 0.00000 0.00000

0.00000 0.00000 -1.00010 -0.10001

0.00000 0.00000 -1.00000 0.00000

And (unfortunately) the final shader result:

http://i57.tinypic.com/2m5jm8w.png

So what would help me a lot if it’s not too much to ask is if you could print out your view-space position buffer (centerViewPos) and the result of the tangent in GetRayOcclusion using something like this…

{
    float2 texelSizedStep = direction * rcp(_ScreenParams.xy);
    float3 tangent = GetViewPosition(origin + texelSizedStep, frustumDiff) - centerViewPos;
    return tangent;
}

Just so I can get an idea of the result I’m aiming for. It’s also the first time I implement a shader in Unity (I used to work with glsl) so I’m kinda learning along the way, I just feel like Unity could use a much better ambient occlusion solution since the current one is pretty average.

Thank you for your time

Cheers

Hey Micael,

Unfortunately, I don’t have much time on my hands at the moment to hack the debug code into the shaders, but I did some quick research on Unity. It looks like it uses the same axis orientation as my code, so you shouldn’t need to swizzle axis coordinates or invert the z-axis. Something else definitely seems wrong (like the order of the frustum vectors, which in this case is clockwise, top-left first).

I’ve also found that Unity already provides some functionality to get the view space depth, using Linear01Depth in conjunction with _ProjectionParams, or LinearEyeDepth (but I can’t find too much info on this online). I would start debug-outputting the interpolated frustum vectors, as well as the linear depth values, see if they’re correct.

Hope that helps!

Hey David,

I’ve taken on a little side project to create a graphics pipeline in HTML 5 (not to make money or anything, I just wanted something difficult to develop). I found myself in a bit of trouble when implementing the z buffer algorithm but your two pages on this topic have really helped with my understanding of what I’m doing wrong.

Thanks!


Guessing you were referring to this Crytek presentation (slide 12): http://www.crytek.com/cryengine/presentations/real-time-atmospheric-effects-in-games

Your explanation is more thorough though.

Hi David,

Thank you for the detailed breakdown, it was very helpful. Wanted to make note of an issue I ran into, for anyone else who wants to work in world space.

My mistake was not accounting for camera offset when converting the z-normalized view rays to world space:

```
…
ray /= ray.z;
// Transform from view to world
ray.w = 1.0;
ray = inverseView * ray;
```

Obvious in hindsight, but took me a while to realize what I was doing wrong: This doesn’t give a ray direction, just some point in world space near the camera. Simply subtract out the camera position to get a direction from the camera:

```
ray -= cameraPosition;
```

*Then* multiply by reconstructed z and add the camera position back in, like you already mentioned.